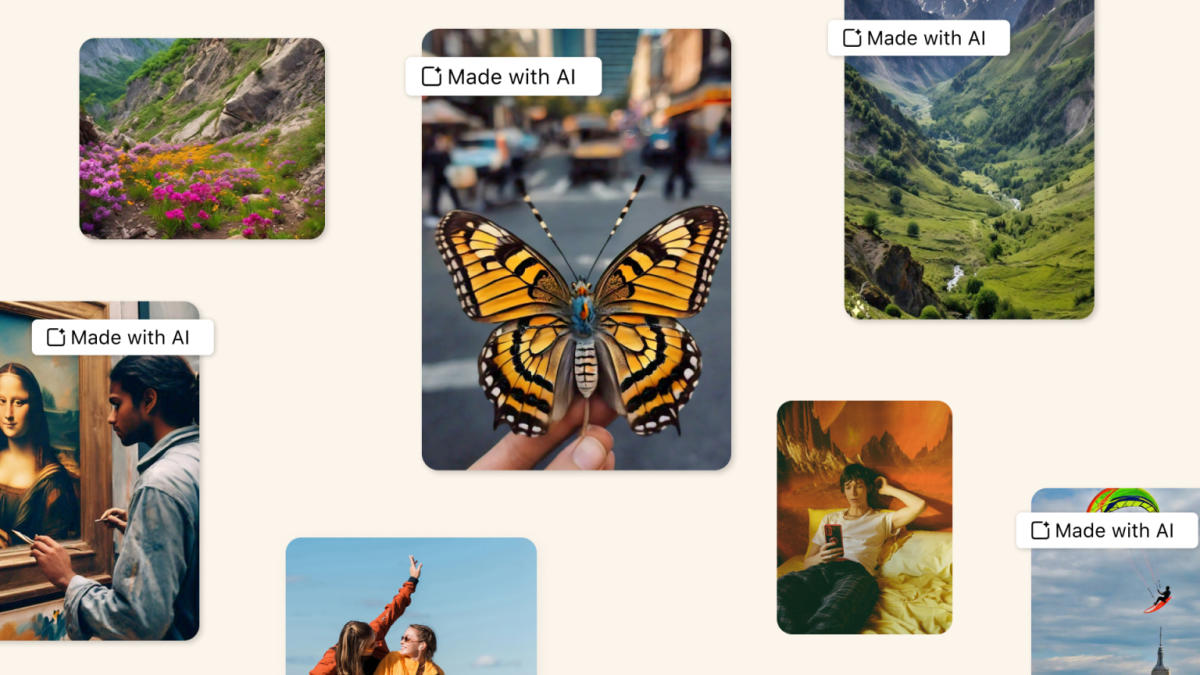

Meta says that its current approach to labeling AI-generated content is too narrow and that it will soon apply a “Made with AI” badge to a broader range of videos, audio and images. Starting in May, it will append the label to media when it detects industry-standard AI image indicators or when users acknowledge that they’re uploading AI-generated content. The company may also apply the label to posts that fact-checkers flag, though it’s likely to downrank content that’s been identified as false or altered.

The company announced the measure in the wake of an Oversight Board decision regarding a video that was maliciously edited to depict President Joe Biden touching his granddaughter inappropriately. The Oversight Board agreed with Meta’s decision not to take down the video from Facebook, because it didn’t violate the company’s rules regarding manipulated media. However, the board suggested that Meta should “reconsider this policy quickly, given the number of elections in 2024.”

Meta says it agrees with the board’s “recommendation that providing transparency and additional context is now the better way to address manipulated media and avoid the risk of unnecessarily restricting freedom of speech, so we’ll keep this content on our platforms so we can add labels and context.” The company added that, in July, it will stop taking down content purely based on violations of its manipulated video policy. “This timeline gives people time to understand the self-disclosure process before we stop removing the smaller subset of manipulated media,” Meta’s vice president of content policy Monika Bickert wrote in a blog post.

Meta had been applying an “Imagined with AI” label to photorealistic images that users whip up using the Meta AI tool. The updated policy goes beyond the Oversight Board’s labeling recommendations, Meta says. “If we determine that digitally-created or altered images, video or audio create a particularly high risk of materially deceiving the public on a matter of importance, we may add a more prominent label so people have more information and context,” Bickert wrote.

While the company generally believes that transparency and allowing appropriately labeled AI-generated video, images and audio to remain on its platforms is the best way forward, it will still delete material that breaks the rules. “We will remove content, regardless of whether it is created by AI or a person, if it violates our policies against voter interference, bullying and harassment, violence and incitement, or any other policy in our Community Standards,” Bickert noted.

The Oversight Board told Engadget in a statement that it was pleased Meta took its recommendations on board. It added that it would review the company’s implementation of them in a transparency report down the line.

“While it is always important to find ways to preserve freedom of expression while protecting against demonstrable offline harm, it is especially critical to do so in the context of such an important year for elections,” the board said. “As such, we are pleased that Meta will begin labeling a wider range of video, audio and image content as ‘Made with AI’ when they detect AI image indicators or when people indicate they have uploaded AI content. This will provide people with greater context and transparency for more types of manipulated media, while also removing posts which violate Meta’s rules in other ways.”

Update 4/5 12:55PM ET: Added comment from The Oversight Board.