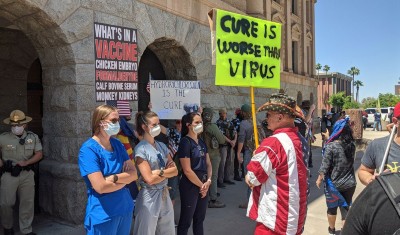

Conspiracy theories and disinformation spread rapidly during the COVID-19 pandemic. Credit: Hollie Adams/Getty

Researchers have shown that artificial intelligence (AI) could be a valuable tool in the fight against conspiracy theories, by designing a chatbot that can debunk false information and get people to question their thinking.

In a study published in Science on 12 September<sup>1</sup>, participants who spent a few minutes interacting with the chatbot, which provided detailed responses and arguments, experienced a shift in thinking that lasted for months. This result suggests that facts and evidence really can change people’s minds.

“This paper really challenged a lot of existing literature about us living in a post-truth society,” says Katherine FitzGerald, who researches conspiracy theories and misinformation at Queensland University of Technology in Brisbane, Australia.

Previous analyses have suggested that people are attracted to conspiracy theories because of a desire for safety and certainty in a turbulent world. But “what we found in this paper goes against that traditional explanation”, says study co-author Thomas Costello, a psychology researcher at American University in Washington DC. “One of the potentially cool applications of this research is you could use AI to debunk conspiracy theories in real life.”

Harmful ideas

Surveys suggest that around 50% of Americans put stock in a conspiracy theory — ranging from the 1969 Moon landing being faked to COVID-19 vaccines containing microchips that enable mass surveillance. The rise of social-media platforms that allow easy information sharing has aggravated the problem.

Although many conspiracies don’t have much societal impact, the ones that catch on can “cause some genuine harm”, says FitzGerald. She points to the attack on the US Capitol building on 6 January 2021 — which was partly driven by claims that the 2020 presidential election was rigged — and anti-vaccine rhetoric affecting COVID-19 vaccine uptake as examples.

It is possible to convince people to change what they think, but doing so can be time-consuming and draining — and the sheer number and variety of conspiracy theories make the issue difficult to address on a large scale. But Costello and his colleagues wanted to explore the potential of large language models (LLMs) — which can quickly process vast amounts of information and generate human-like responses — to tackle conspiracy theories. “They’ve been trained on the Internet, they know all the conspiracies and they know all the rebuttals, and so it seemed like a really natural fit,” says Costello.

Believe it or not

The researchers designed a custom chatbot using GPT-4 Turbo — the newest LLM from ChatGPT creator OpenAI, based in San Francisco, California — that was trained to argue convincingly against conspiracies. They then recruited more than 1,000 participants, whose demographics were quota-matched to the US census in terms of characteristics such as gender and ethnicity. Costello says that, by recruiting “people who have had different life experiences and are bringing in their own perspectives”, the team could assess the chatbot’s ability to debunk a variety of conspiracies.

Each participant was asked to describe a conspiracy theory, share why they thought it was true and express the strength of their conviction as a percentage. These details were shared with the chatbot, which then engaged in a conversation with the participant, in which it pointed to information and evidence that undermined or debunked the conspiracy and responded to the participant’s questions. The chatbot’s responses were thorough and detailed, often reaching hundreds of words. On average, each conversation lasted about 8 minutes.

The approach proved effective: participants’ self-rated confidence in their chosen conspiracy theory decreased by an average of 21% after interacting with the chatbot. And 25% of participants went from being confident about their thinking, having a score of more than 50%, to being uncertain. The shift was negligible for control groups, who spoke to the same chatbot for a similar length of time but on an unrelated topic. A follow-up survey two months later showed that the shift in perspective had persisted for many participants.

Although the results of the study are promising, the researchers note that the participants were paid survey respondents and might not be representative of people who are deeply entrenched in conspiracy theories.

Effective intervention

FitzGerald is excited by AI’s potential to combat conspiracies. “If we can have a way to intervene and stop offline violence from happening, then that’s always a good thing”, she says. She suggests that follow-up studies could explore different metrics for assessing the chatbot’s effectiveness, or replicate the study using LLMs with less-advanced safety measures to make sure they don’t reinforce conspiratorial thinking.

Previous studies have raised concerns about the tendency of AI chatbots to ‘hallucinate’ false information. The study took care to avoid this possibility: Costello’s team asked a professional fact-checker to assess the accuracy of the information provided by the chatbot, and the fact-checker confirmed that none of its statements were false or politically biased.

Costello says that the team is planning further experiments to investigate different chatbot strategies, for example by testing what happens when the chatbot’s responses aren’t polite. They hope that by pinpointing “the experiments where the persuasion doesn’t work anymore”, they’ll learn more about what made this particular study so successful.